About the Journal

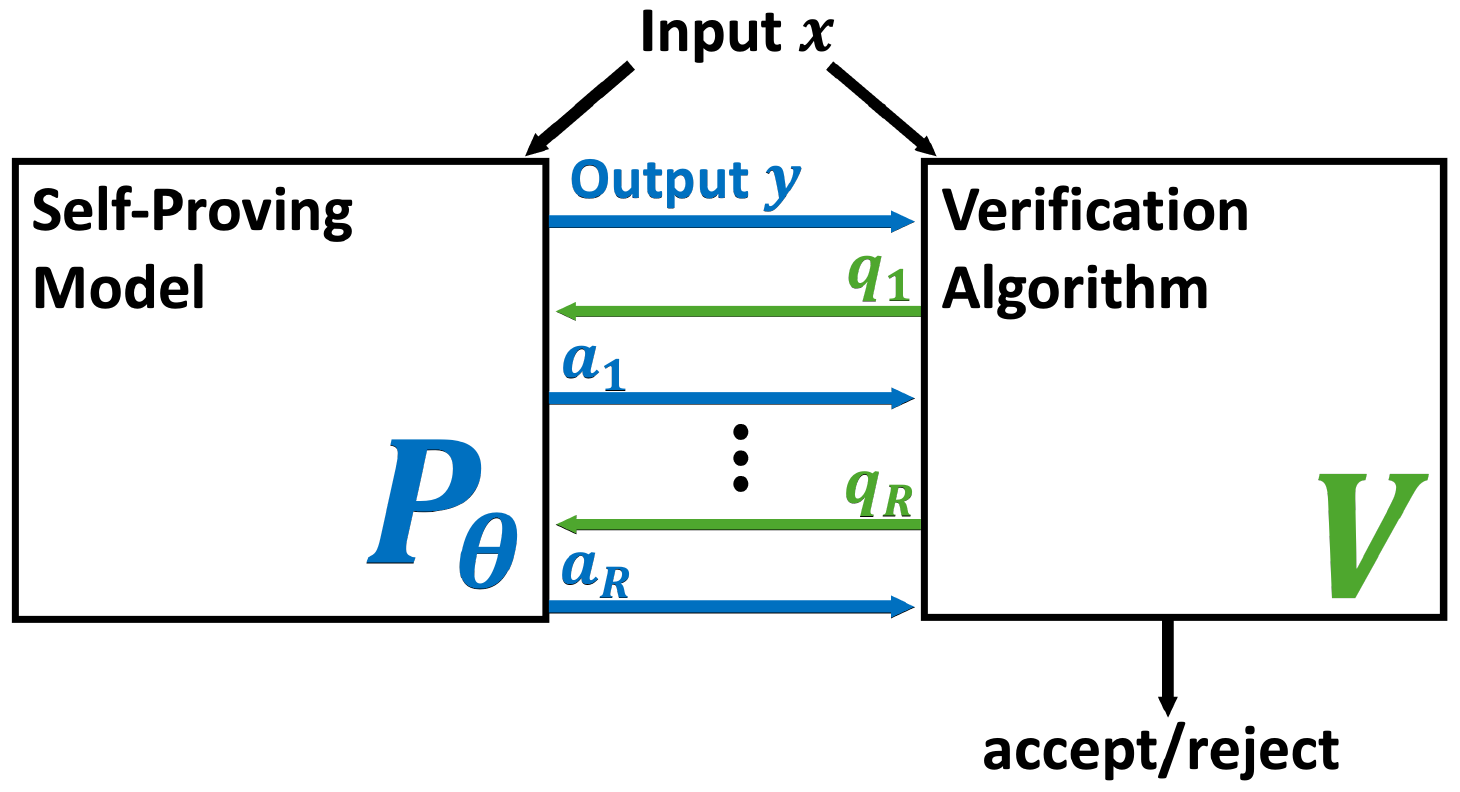

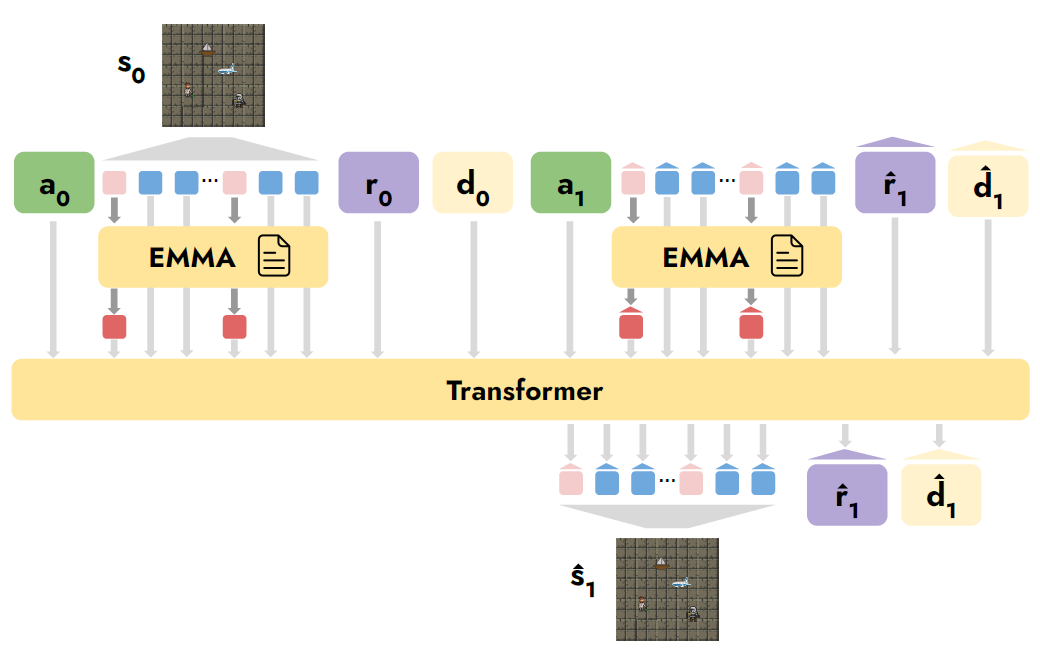

As we progress toward artificial general intelligence (AGI), ensuring safe interaction between AGI and humans is increasingly urgent and important. To us, safe interaction means interaction that is provably safe, whether through mathematical proofs or the laws of physics, or that is guaranteed safe through modeling and simulation. We focus on "high-risk" AGI.

AGI™ is open access. We publish key developments in AGI and AGI safety, covering theory, technology, and governance. We welcome submissions of articles, expert commentary, editorials, letters, and book reviews. Article processing charges are currently waived. Authors typically retain copyright under the Creative Commons Attribution-NoDerivatives 4.0 (CC BY-ND 4.0) license.

Current Issue

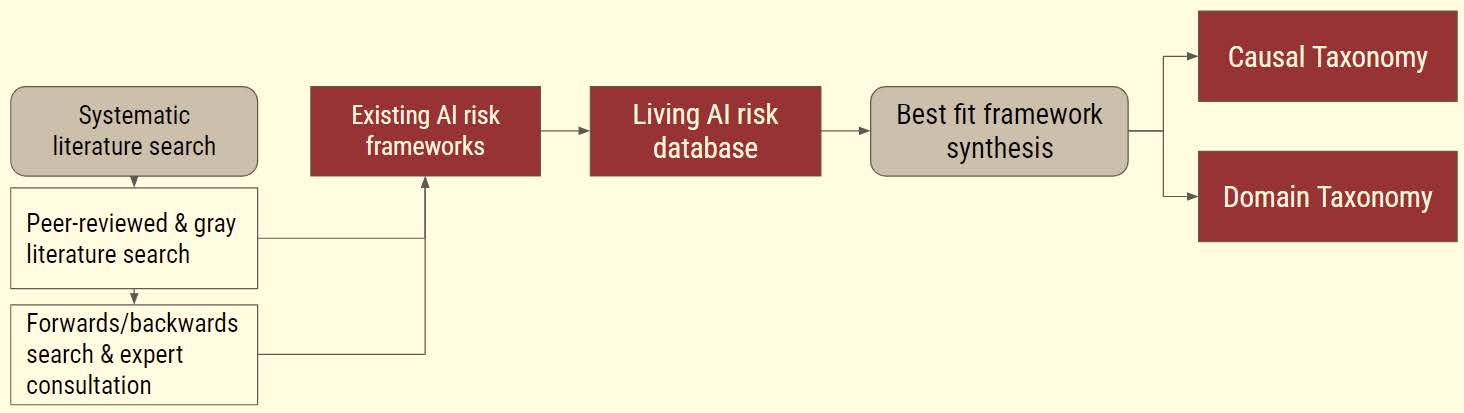

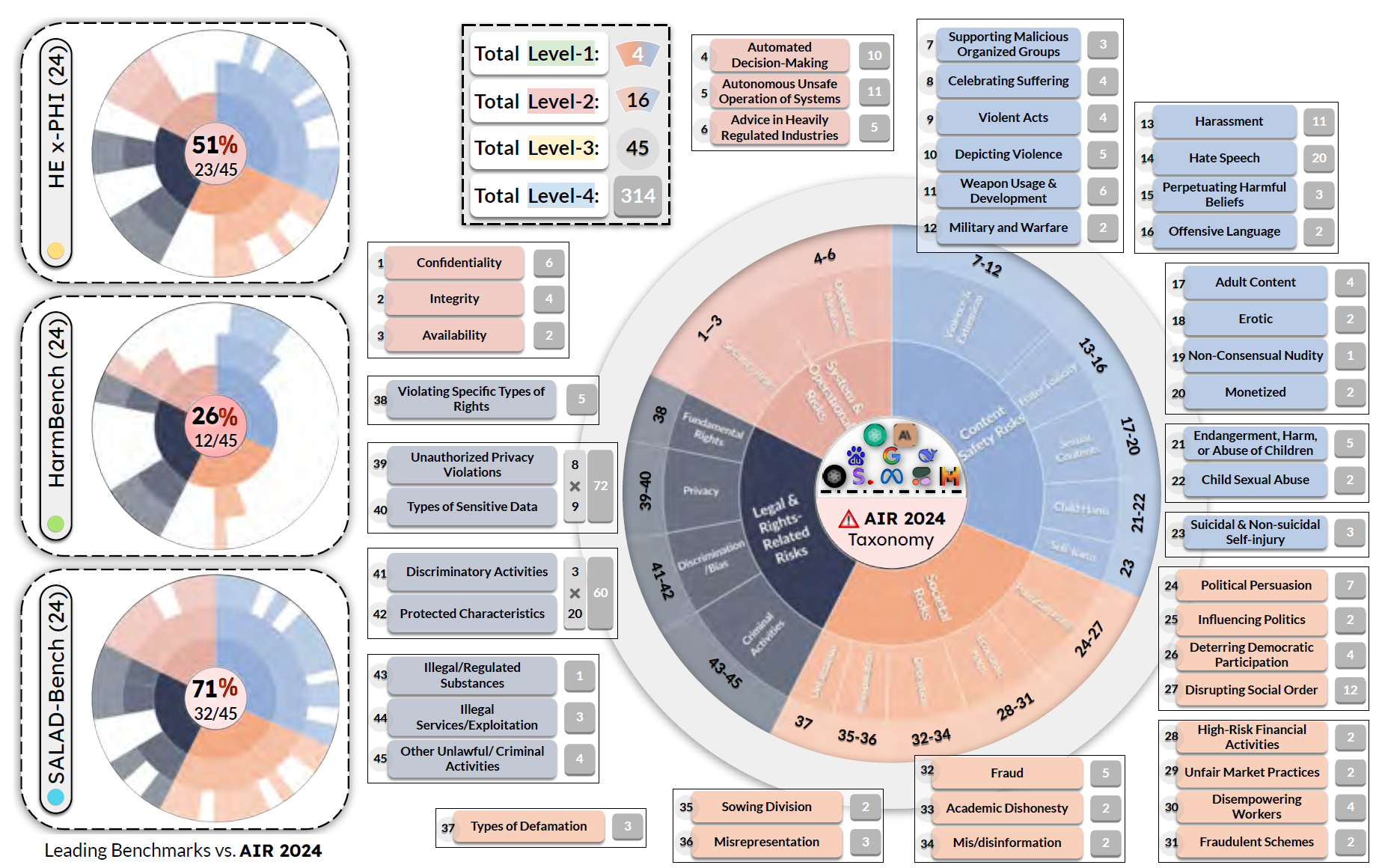

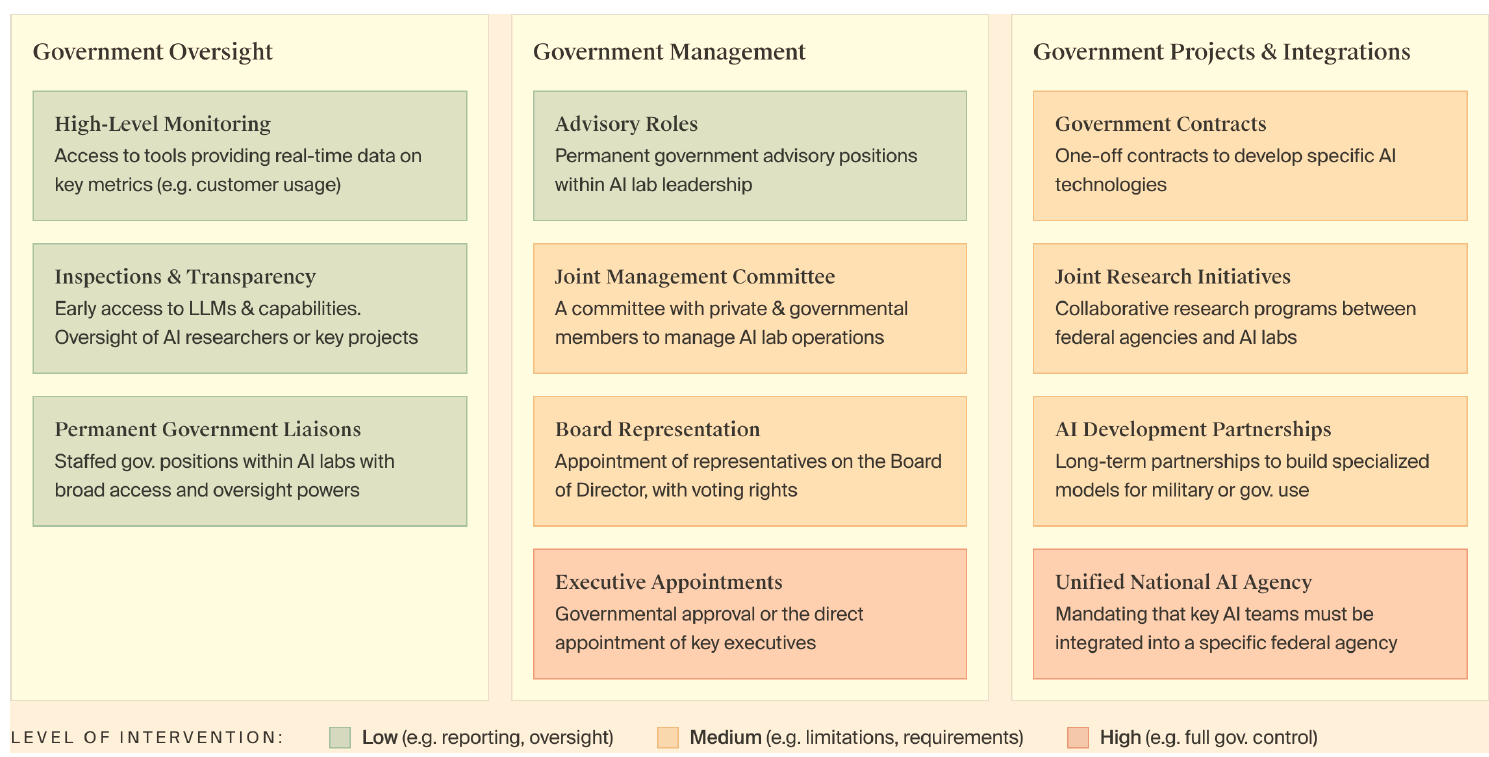

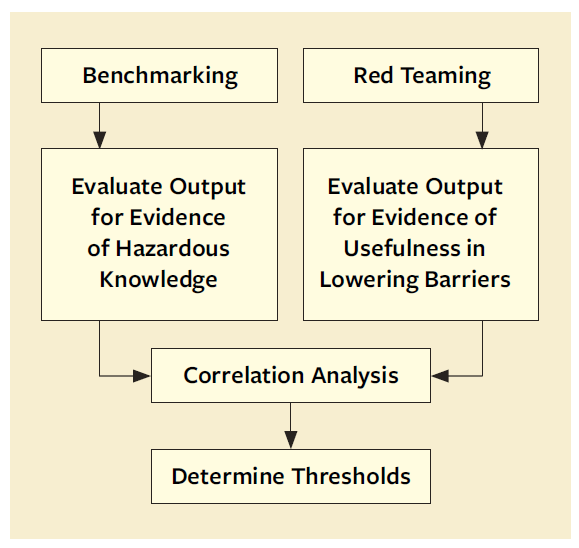

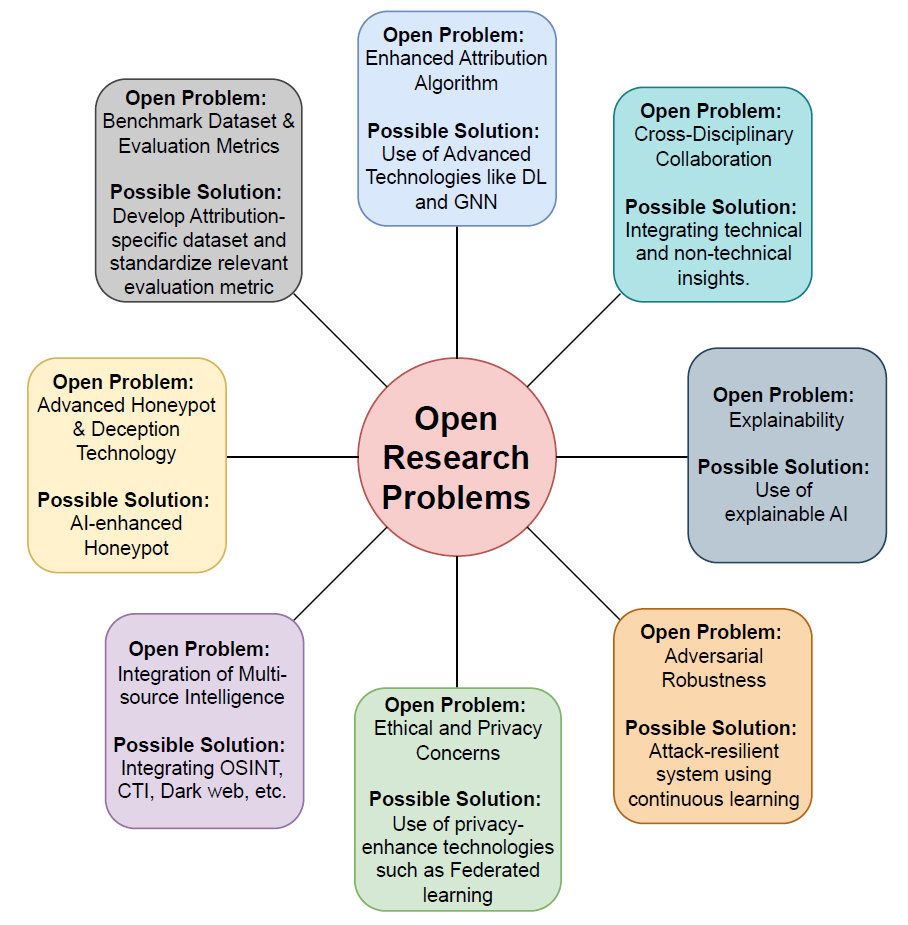

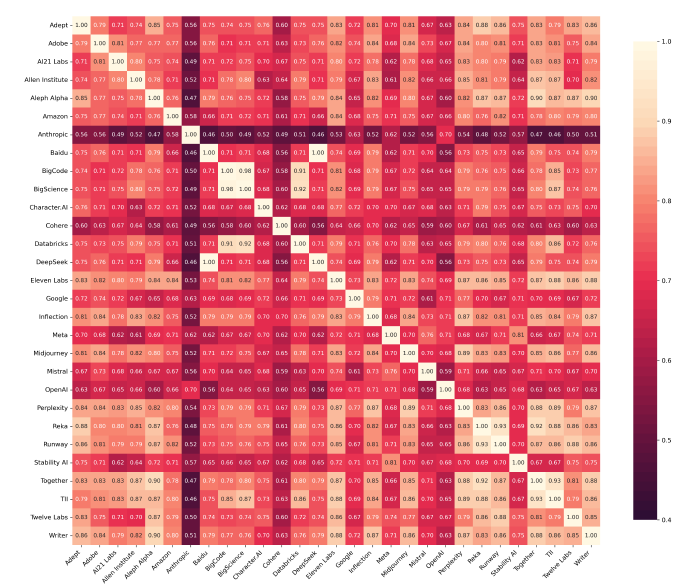

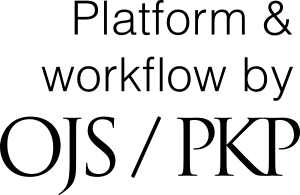

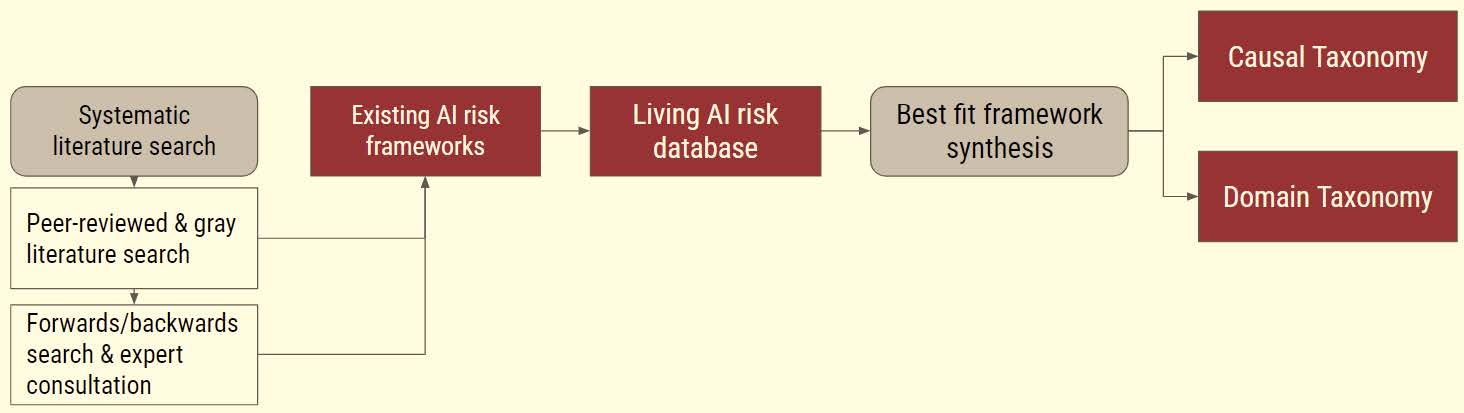

Vol. 1 No. 1 (2024): Artificial General Intelligence Risks, Governance, Methods

The first issue of AGI focuses on Risks, Governance, and Safety & Alignment Methods.

Published: 2024-10-09