Language-Guided World Models: A Model-Based Approach to AI Control

Keywords:

LLM, world model, AGI, artificial general intelligence, AGI safety, AGI value alignment

Abstract

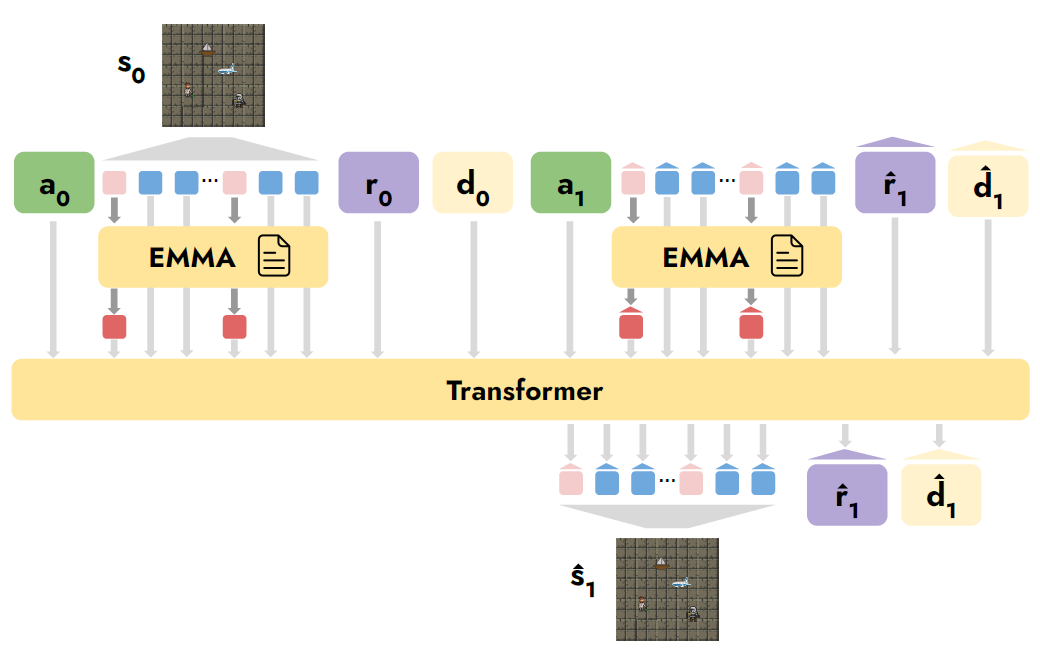

This paper introduces the concept of Language-Guided World Models (LWMs), probabilistic models that can simulate environments by reading texts. Agents equipped with these models give humans more extensive and efficient control, allowing them to alter agent behaviors across multiple tasks simultaneously through natural verbal communication. In this work, we take initial steps toward developing robust LWMs that can generalize to compositionally novel language descriptions. We design a challenging world modeling benchmark based on the game MESSENGER (Hanjie et al., 2021), with evaluation settings that require varying degrees of compositional generalization. Our experiments reveal that the state-of-the-art Transformer model generalizes poorly: it offers only marginal improvements in simulation quality over a no-text baseline. We devise a more robust model by fusing the Transformer with the EMMA attention mechanism (Hanjie et al., 2021). Our model substantially outperforms the Transformer and approaches the performance of a model with oracle semantic parsing and grounding capabilities. To demonstrate the practicality of this model for improving AI safety and transparency, we simulate a scenario in which the model enables an agent to present plans to a human before execution and to revise those plans based on the human's language feedback.
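The abstract names the EMMA attention mechanism (Hanjie et al., 2021) without describing it. As a rough illustration of the general idea only, assuming that an entity's embedding acts as a query over key and value vectors derived from the text descriptions, the pure-Python sketch below computes scaled dot-product attention for a single entity. The function name `emma_style_attention`, the single-head form, and the hand-set toy vectors are hypothetical; this is not the authors' implementation, and the original mechanism operates on learned text encodings rather than fixed vectors.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def emma_style_attention(
    entity_query: Vector, desc_keys: List[Vector], desc_values: List[Vector]
) -> Tuple[Vector, List[float]]:
    """Hypothetical single-head attention: one entity queries all descriptions.

    Returns the attention-weighted sum of description values (a text-conditioned
    representation of the entity) together with the attention weights.
    """
    d = len(entity_query)
    # Scaled dot-product score between the entity query and each description key.
    scores = [dot(entity_query, k) / math.sqrt(d) for k in desc_keys]
    weights = softmax(scores)
    # Weighted sum of the description value vectors.
    out = [0.0] * len(desc_values[0])
    for w, v in zip(weights, desc_values):
        for i, x in enumerate(v):
            out[i] += w * x
    return out, weights


# Toy example: the entity's query aligns with the first description's key,
# so most attention mass should land on that description.
query = [4.0, 0.0]                   # toy entity embedding (assumed)
keys = [[1.0, 0.0], [0.0, 1.0]]      # one key per description sentence (assumed)
values = [[1.0, 0.0], [0.0, 1.0]]    # one value per description sentence (assumed)
text_repr, weights = emma_style_attention(query, keys, values)
print([round(w, 3) for w in weights])
```

In a model of the kind the abstract describes, a vector like `text_repr` could serve as the language-conditioned representation of that entity and be passed to the Transformer world model; how the authors actually combine the two is not specified here.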

License

Copyright (c) 2024 Alex Zhang, Khanh Nguyen

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.