AI Risk Categorization Decoded (AIR 2024)

From Government Regulations to Corporate Policies

Keywords:

AI risks, AGI risks, AI risks taxonomy, AGI governance, corporate AI, AI governance, corporate AI policy

Abstract

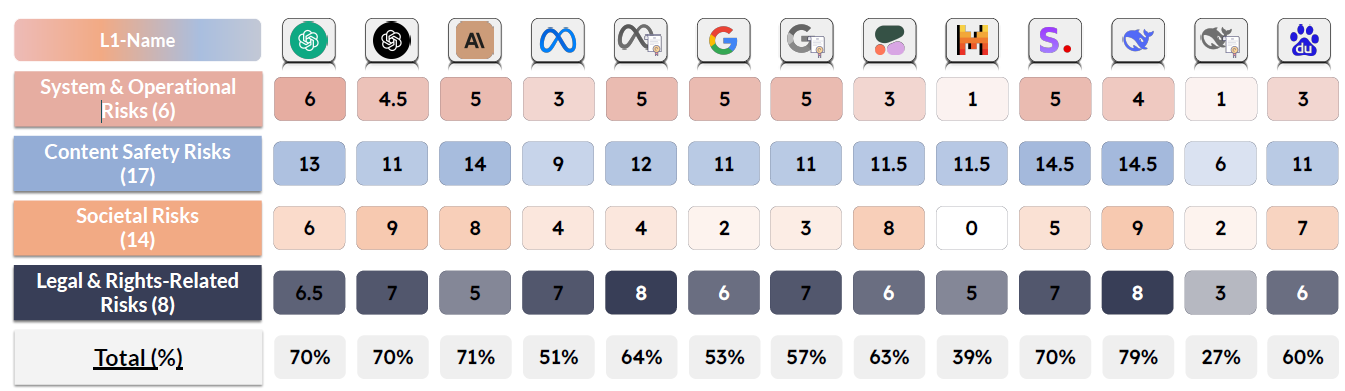

We present a comprehensive AI risk taxonomy derived from eight government policies from the European Union, United States, and China, and from 16 company policies worldwide. This marks a significant step toward a unified language for generative AI safety evaluation. We identify 314 unique risk categories, organized into a four-tiered taxonomy. At the highest level, the taxonomy comprises System & Operational Risks, Content Safety Risks, Societal Risks, and Legal & Rights Risks. It connects the differing descriptions of, and approaches to, risk, highlighting overlaps and discrepancies between public- and private-sector conceptions of risk. By providing this unified framework, we aim to advance AI safety through information sharing across sectors and the promotion of best practices for mitigating risks in generative AI models and systems.

License

Copyright (c) 2024 Yi Zeng

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.