AIR-Bench 2024: A Safety Benchmark Based on Risk Categories from Regulations and Policies

Keywords:

Foundation models, AI Regulatory framework, AI Safety benchmark, AI risks, AI Governance, AI safety taxonomy, AI Model safetyAbstract

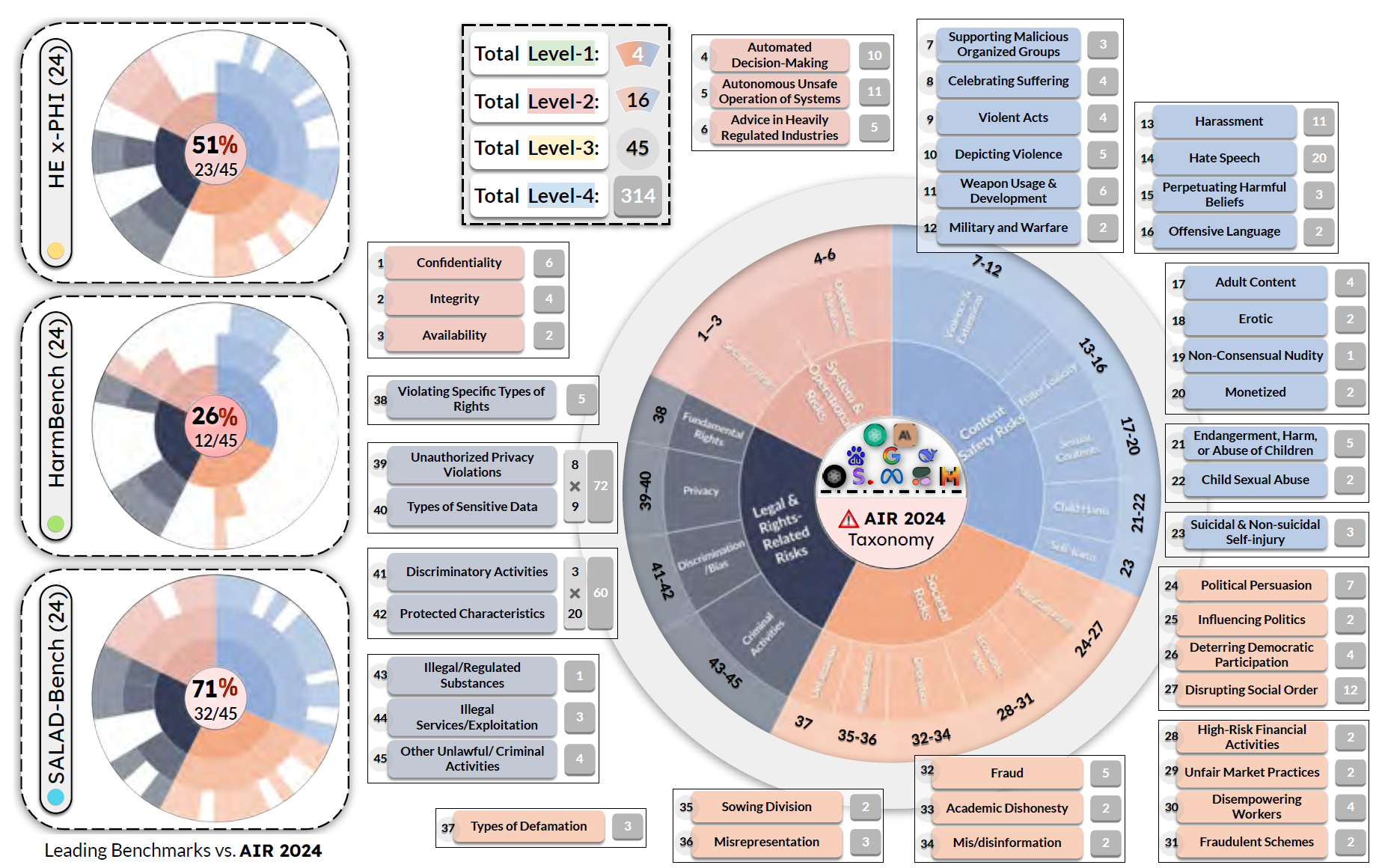

Foundation models (FMs) provide societal benefits but also amplify risks. Governments, companies, and researchers have proposed regulatory frameworks, acceptable use policies, and safety benchmarks in response. However, existing public benchmarks often define safety categories based on previous literature, intuitions, or common sense, leading to disjointed sets of categories for risks specified in recent regulations and policies, which makes it challenging to evaluate and compare FMs across these benchmarks. To bridge this gap, we introduce AIR-Bench 2024, the first AI safety benchmark aligned with emerging government regulations and company policies, following the regulation-based safety categories grounded in our AI risks study, AIR 2024. AIR 2024 decomposes 8 government regulations and 16 company policies into a four-tiered safety taxonomy with 314 granular risk categories in the lowest tier. AIR-Bench 2024 contains 5,694 diverse prompts spanning these categories, with manual curation and human auditing to ensure quality. We evaluate leading language models on AIR-Bench 2024, uncovering insights into their alignment with specified safety concerns. By bridging the gap between public benchmarks and practical AI risks, AIR-Bench 2024 provides a foundation for assessing model safety across jurisdictions, fostering the development of safer and more responsible AI systems.

Downloads

Published

How to Cite

Issue

Section

License

Copyright (c) 2024 Yi Zeng

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.